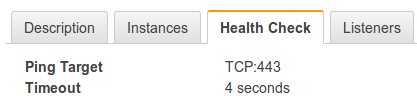

I help develop and run some web apps on AWS, and recently I was forced to learn a little more about the AWS ELB Health-Check types. Our stack consists of n application servers behind a single Elastic Load Balancer. On each application server, we run NGINX as a reverse proxy to our Ruby API, and we require mutual authentication. Because of that, we had to delegate SSL/TLS to NGINX running on our application servers and use the ELB purely as a pass-through. So we set up a TCP Health-Check pointed at NGINX.

And it all seemed to work. Our ELB was balancing requests evenly between servers, just as we expected. Everything was great... until it wasn't. During a load test, one of our application servers had major issues. To be specific, on a single application server, our Ruby application struggled and its response times kept growing. NGINX was fine, however. This was key.

The intended behaviour in our setup was to direct traffic to servers based on response times, so that servers with lower latency would get more traffic. In this scenario, where one server is responding slowly, we were hoping that AWS would route less traffic to it. We don't want any requests to be slow, but if some are, we want as few of them as possible.

This was not the case. A post mortem showed that every server handled roughly the same amount of traffic, including the much slower one. Why was that the case, you ask? Because NGINX is faster than our Ruby application.

The AWS TCP Health-Check doesn't measure total request time. It measures the time to first TCP ACK, which comes from NGINX, and not our web application. So from the ELB's perspective all of our servers were healthy and responding quickly.

Actual clients, however, only care about total request time. They don't care that NGINX can open up a TCP connection quickly when our web app never actually responds.
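
To make the difference concrete, here is a minimal Python sketch, not our production setup. It starts a local stand-in server that accepts connections immediately but takes `APP_DELAY_SECONDS` to answer, then times a bare TCP connect against a full HTTP request. The handler, the delay value, and the function names are all hypothetical, and the bare connect is only an approximation of what a TCP check observes.

```python
import http.client
import http.server
import socket
import threading
import time

APP_DELAY_SECONDS = 0.5  # hypothetical: how long the slow "application" takes to answer


class SlowHandler(http.server.BaseHTTPRequestHandler):
    """Stand-in for a slow app behind a fast proxy: accepts at once, answers late."""

    def do_GET(self):
        time.sleep(APP_DELAY_SECONDS)  # simulate the struggling application
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence per-request logging


def tcp_connect_seconds(host, port):
    """Time only the TCP handshake, roughly what a TCP check can see."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=5):
        pass
    return time.perf_counter() - start


def http_request_seconds(host, port, path="/elb-healthcheck"):
    """Time a complete HTTP request, which is what a real client experiences."""
    start = time.perf_counter()
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", path)
        conn.getresponse().read()
    finally:
        conn.close()
    return time.perf_counter() - start


def measure():
    """Run both probes against a throwaway local server on a free port."""
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), SlowHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    try:
        return tcp_connect_seconds(host, port), http_request_seconds(host, port)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    connect, total = measure()
    print(f"TCP connect: {connect * 1000:.1f} ms")
    print(f"Full HTTP request: {total * 1000:.1f} ms")
```

The connect time stays tiny no matter how large the delay is, which is exactly why a TCP-level probe could not tell our slow server from the healthy ones.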

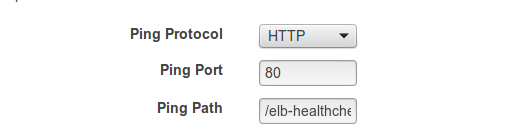

So in order for our ELB to load balance correctly, we had to switch from a TCP to an HTTP Health-Check. But we still had the issue of needing mutual authentication for all API requests.

We ended up opening a single "Health" route that does not require mutual auth but still routes through our Ruby application. We did this by adding a new server block to our nginx.conf:

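    # HTTP Health server
    server {
        listen 80;

        # Reject everything on port 80 except the health-check route
        location / { deny all; }

        # Exact-match route, proxied through to the Ruby application
        location = /elb-healthcheck {
            proxy_pass http://ruby_app;
        }
    }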

Now our NGINX process listens on port 443 for all real requests, but it also listens on port 80 for a single "/elb-healthcheck" route. You will notice that we deny all other traffic over port 80, and our Ruby application exposes no real functionality through the elb-healthcheck route. This allowed us to use an HTTP health check.
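
For anyone who prefers to script this rather than click through the console, the following is a rough sketch using boto3's Classic Load Balancer client (`elb`) and its `configure_health_check` call. It assumes a Classic ELB. The load balancer name is whatever yours is called, and the interval, timeout, and threshold values are illustrative placeholders rather than the settings we actually used.

```python
def build_health_check(port=80, path="/elb-healthcheck"):
    """Build the HealthCheck structure for an HTTP check on the given route.

    All numeric values below are assumed placeholders; tune them for your stack.
    """
    return {
        "Target": f"HTTP:{port}{path}",  # protocol:port/path
        "Interval": 30,                  # seconds between checks
        "Timeout": 5,                    # seconds to wait; must be less than Interval
        "UnhealthyThreshold": 2,         # consecutive failures before marking unhealthy
        "HealthyThreshold": 2,           # consecutive successes before marking healthy
    }


def apply_health_check(load_balancer_name):
    """Apply the HTTP health check to a Classic ELB (needs boto3 and AWS credentials)."""
    import boto3  # third-party AWS SDK, imported lazily so the builder works without it

    elb = boto3.client("elb")
    return elb.configure_health_check(
        LoadBalancerName=load_balancer_name,
        HealthCheck=build_health_check(),
    )
```

Calling `apply_health_check` with the name of your load balancer would point the check at port 80 and the `/elb-healthcheck` route, so every probe has to travel through NGINX and into the application.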

So beware of the AWS TCP Health-Check, because NGINX is faster than your web app.